vad 0.0.7+1  vad: ^0.0.7+1 copied to clipboard

vad: ^0.0.7+1 copied to clipboard

VAD is a cross-platform Voice Activity Detection system, allowing Flutter applications to seamlessly handle various VAD events using Silero VAD v4/v5 models.

VAD #

VAD is a Flutter library for Voice Activity Detection (VAD) across iOS, Android, Web, macOS, Windows, and Linux platforms. This package allows applications to start and stop VAD-based listening and handle various VAD events seamlessly.

Under the hood, the VAD Package uses direct FFI bindings to ONNX Runtime for native platforms (iOS, Android, macOS, Windows, Linux) and dart:js_interop for Web. All platforms utilize the Silero VAD models with full-feature parity across platforms.

The package provides a simple API to start and stop VAD listening, configure VAD parameters, and handle VAD events such as speech start, speech end, errors, and misfires.

Note: Echo cancellation is not available on Windows and Linux platforms due to limitations in the underlying audio capture library.

Table of Contents #

- VAD

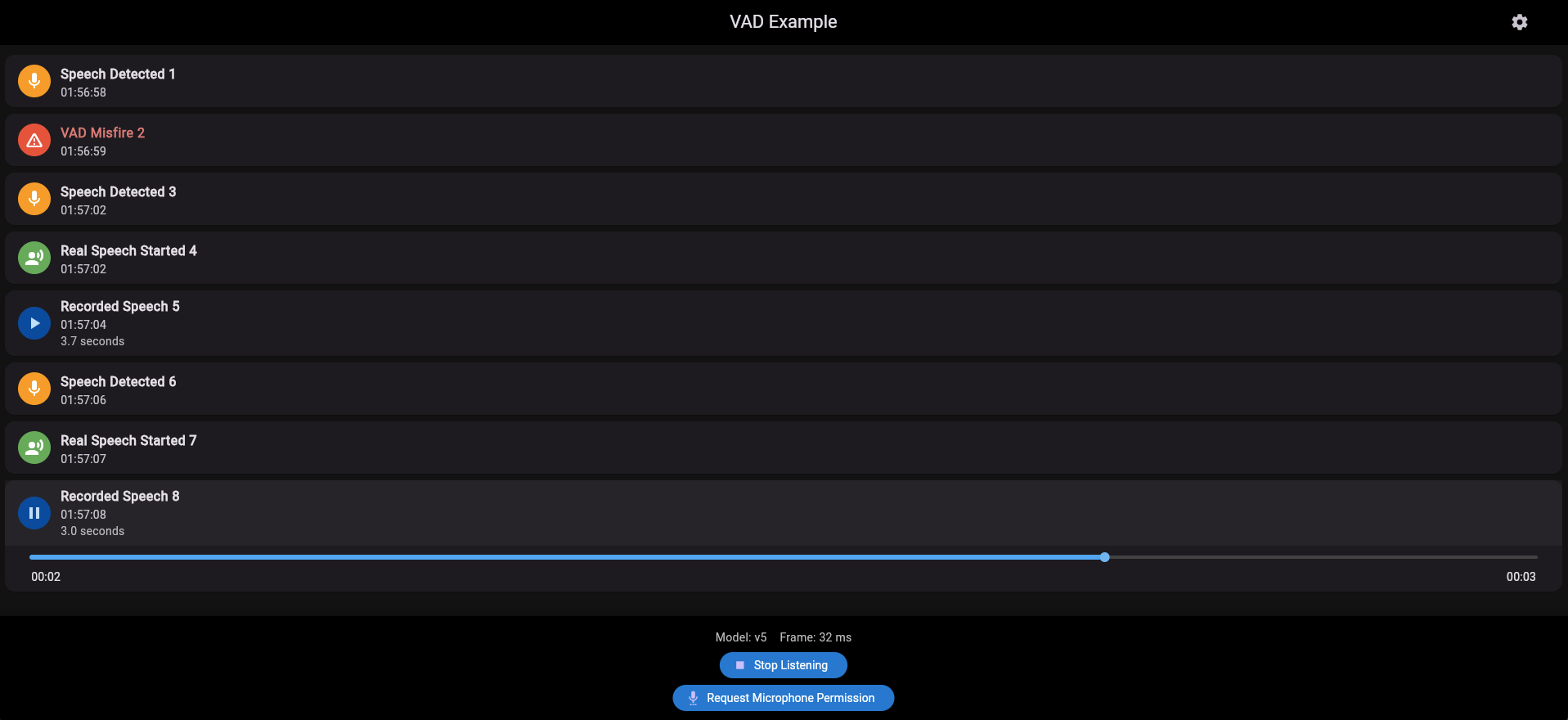

Live Demo #

Check out the VAD Package Example App to see the VAD Package in action on the Web platform.

Features #

-

Cross-Platform Support: Works seamlessly on iOS, Android, Web, macOS, Windows, and Linux.

-

Event Streams: Listen to events such as speech start, real speech start, speech end, speech misfire, frame processed, and errors.

-

Silero V4 and V5 Models: Supports both Silero VAD v4 and v5 models.

-

16KB Page Size Support: Native Android libraries are properly aligned for 16KB page sizes, meeting Google Play requirements for Android 15+ devices.

-

Custom Audio Streams: Provide your own audio stream for advanced use cases like custom recording configurations or processing audio from non-microphone sources.

Getting Started #

Prerequisites #

Before integrating the VAD Package into your Flutter application, ensure that you have the necessary configurations for each target platform.

Web

To use VAD on the web, include the following scripts within the head and body tags respectively in the web/index.html file to load the necessary VAD libraries:

Option 1: Using CDN (Default)

<head>

...

<script src="https://cdn.jsdelivr.net/npm/onnxruntime-web@1.22.0/dist/ort.wasm.min.js"></script>

...

</head>

Option 2: Using Local Assets (Offline/Self-hosted) If you prefer to bundle all assets locally instead of using CDN:

-

Download the required files to your

assets/directory: -

Update your

web/index.html:

<head>

...

<script src="assets/ort.wasm.min.js"></script>

...

</head>

- Configure your VAD handler to use local assets:

await vadHandler.startListening(

baseAssetPath: '/assets/', // For VAD model files

onnxWASMBasePath: '/assets/', // For ONNX Runtime WASM files

// ... other parameters

);

You can also refer to the VAD Example App for a complete example.

Tip: Enable WASM multithreading (SharedArrayBuffer) for performance improvements

-

For Production, send the following headers in your server response:

Cross-Origin-Embedder-Policy: require-corp Cross-Origin-Opener-Policy: same-origin -

For Local, refer to the workaround applied in the GitHub Pages demo page for the example app. It is achieved with the inclusion of enable-threads.js and loading it in the web/index.html#L24 file in the example app.

iOS

For iOS, you need to configure microphone permissions and other settings in your Info.plist file.

- Add Microphone Usage Description: Open

ios/Runner/Info.plistand add the following entries to request microphone access:

<key>NSMicrophoneUsageDescription</key>

<string>This app needs access to the microphone for Voice Activity Detection.</string>

- Configure Build Settings: Ensure that your

Podfileincludes the necessary build settings for microphone permissions:

post_install do |installer|

installer.pods_project.targets.each do |target|

flutter_additional_ios_build_settings(target)

target.build_configurations.each do |config|

config.build_settings['GCC_PREPROCESSOR_DEFINITIONS'] ||= [

'$(inherited)',

'PERMISSION_MICROPHONE=1',

]

end

end

end

Android

For Android, configure the required permissions and build settings in your AndroidManifest.xml and build.gradle files.

- Add Permissions: Open

android/app/src/main/AndroidManifest.xmland add the following permissions:

<uses-permission android:name="android.permission.RECORD_AUDIO" />

<uses-permission android:name="android.permission.INTERNET"/>

<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS"/>

- Configure Build Settings: Open

android/app/build.gradleand add the following settings:

android {

compileSdkVersion 34

...

}

Installation #

Add the VAD Package to your pubspec.yaml dependencies:

dependencies:

flutter:

sdk: flutter

vad: ^0.0.5

permission_handler: ^11.3.1

Then, run flutter pub get to fetch the packages.

Usage #

Example #

Below is a simple example demonstrating how to integrate and use the VAD Package in a Flutter application. For a more detailed example, check out the VAD Example App in the GitHub repository.

// main.dart

import 'package:flutter/material.dart';

import 'package:permission_handler/permission_handler.dart';

import 'package:vad/vad.dart';

void main() async {

WidgetsFlutterBinding.ensureInitialized();

runApp(const MyApp());

}

class MyApp extends StatelessWidget {

const MyApp({super.key});

@override

Widget build(BuildContext context) {

return MaterialApp(

home: Scaffold(

appBar: AppBar(title: const Text("VAD Example")),

body: const MyHomePage(),

),

);

}

}

class MyHomePage extends StatefulWidget {

const MyHomePage({super.key});

@override

State<MyHomePage> createState() => _MyHomePageState();

}

class _MyHomePageState extends State<MyHomePage> {

final _vadHandler = VadHandler.create(isDebug: true);

bool isListening = false;

final List<String> receivedEvents = [];

@override

void initState() {

super.initState();

_setupVadHandler();

}

void _setupVadHandler() {

_vadHandler.onSpeechStart.listen((_) {

debugPrint('Speech detected.');

setState(() {

receivedEvents.add('Speech detected.');

});

});

_vadHandler.onRealSpeechStart.listen((_) {

debugPrint('Real speech start detected (not a misfire).');

setState(() {

receivedEvents.add('Real speech start detected (not a misfire).');

});

});

_vadHandler.onSpeechEnd.listen((List<double> samples) {

debugPrint('Speech ended, first 10 samples: ${samples.take(10).toList()}');

setState(() {

receivedEvents.add('Speech ended, first 10 samples: ${samples.take(10).toList()}');

});

});

_vadHandler.onFrameProcessed.listen((frameData) {

final isSpeech = frameData.isSpeech;

final notSpeech = frameData.notSpeech;

final firstFewSamples = frameData.frame.take(5).toList();

debugPrint('Frame processed - Speech probability: $isSpeech, Not speech: $notSpeech');

debugPrint('First few audio samples: $firstFewSamples');

// You can use this for real-time audio processing

});

_vadHandler.onVADMisfire.listen((_) {

debugPrint('VAD misfire detected.');

setState(() {

receivedEvents.add('VAD misfire detected.');

});

});

_vadHandler.onError.listen((String message) {

debugPrint('Error: $message');

setState(() {

receivedEvents.add('Error: $message');

});

});

}

@override

void dispose() {

_vadHandler.dispose(); // Note: dispose() is called without await in Widget.dispose()

super.dispose();

}

@override

Widget build(BuildContext context) {

return Padding(

padding: const EdgeInsets.all(16),

child: Column(

mainAxisSize: MainAxisSize.min,

children: [

ElevatedButton.icon(

onPressed: () async {

if (isListening) {

await _vadHandler.stopListening();

} else {

await _vadHandler.startListening();

}

setState(() {

isListening = !isListening;

});

},

icon: Icon(isListening ? Icons.stop : Icons.mic),

label: Text(isListening ? "Stop Listening" : "Start Listening"),

style: ElevatedButton.styleFrom(

minimumSize: const Size(double.infinity, 48),

),

),

const SizedBox(height: 8),

TextButton.icon(

onPressed: () async {

final status = await Permission.microphone.request();

debugPrint("Microphone permission status: $status");

},

icon: const Icon(Icons.settings_voice),

label: const Text("Request Microphone Permission"),

style: TextButton.styleFrom(

minimumSize: const Size(double.infinity, 48),

),

),

const SizedBox(height: 16),

Expanded(

child: ListView.builder(

itemCount: receivedEvents.length,

itemBuilder: (context, index) {

return ListTile(

title: Text(receivedEvents[index]),

);

},

),

),

],

),

);

}

}

Explanation of the Example

- Initialization:

-

Initializes the

VadHandlerwith debugging enabled. -

Sets up listeners for various VAD events (

onSpeechStart,onRealSpeechStart,onSpeechEnd,onFrameProcessed,onVADMisfire,onError).

- Permissions:

- Requests microphone permission when the "Request Microphone Permission" button is pressed.

- Listening Controls:

-

Toggles listening on and off with the "Start Listening"/"Stop Listening" button.

-

Configures the audio player to mix with other audio sources on iOS.

- Event Handling:

-

Displays received events in a list view.

-

Updates the UI based on the received events.

Note: For Real-time Audio Processing, listen to the onFrameProcessed events to access raw audio frames and speech probabilities as they're processed.

VadHandler API #

Methods #

create

Creates a new instance of the VadHandler with optional debugging enabled with the isDebug parameter. Model files are loaded from CDN by default but can be customized using the baseAssetPath parameter in startListening.

startListening

Starts the VAD with configurable parameters. Returns a Future<void> that completes when the VAD session has started.

Notes:

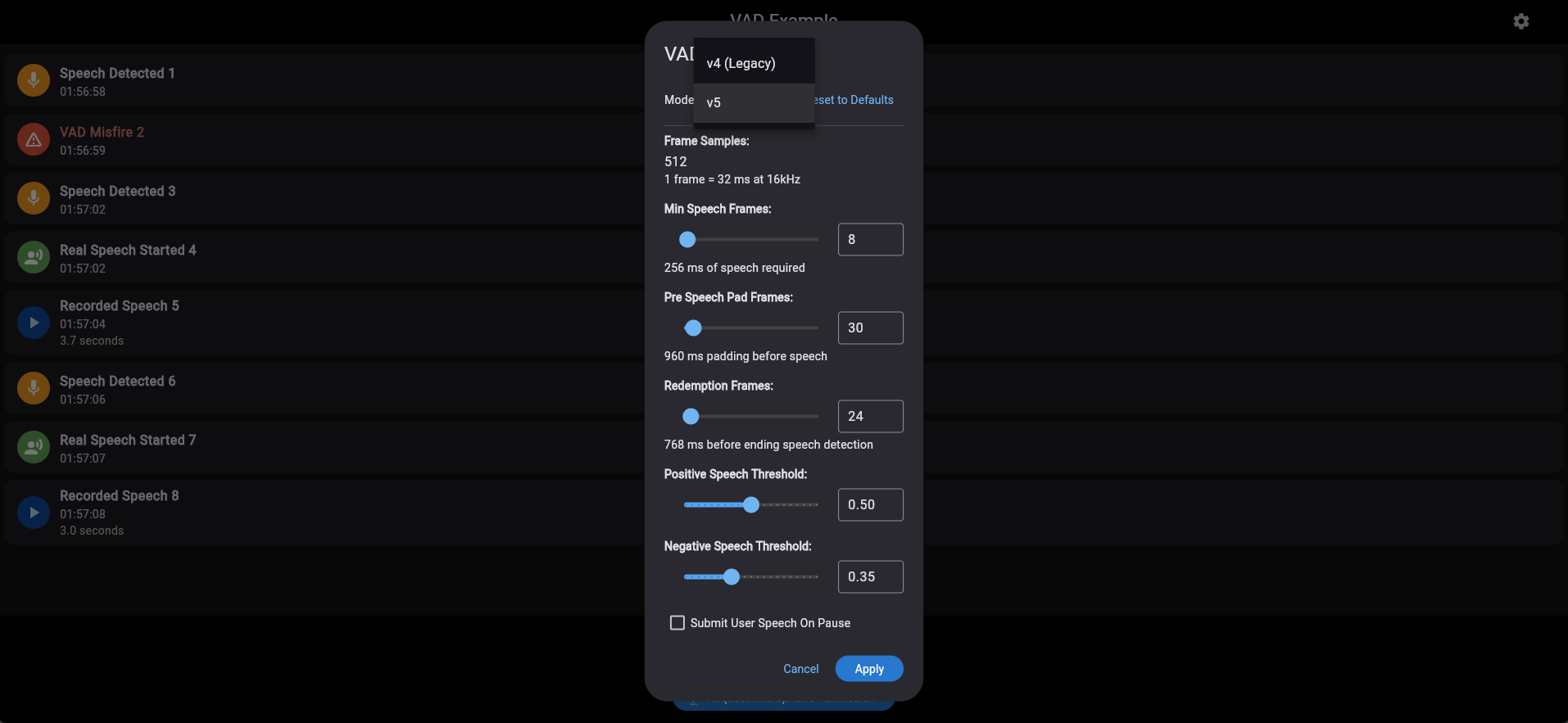

- The sample rate is fixed at 16kHz, which means when using legacy model with default frameSamples value, one frame is equal to 1536 samples or 96ms.

- For Silero VAD v5 model, frameSamples must be set to 512 samples unlike the previous version, so one frame is equal to 32ms.

modelparameter can be set to 'legacy' or 'v5' to use the respective VAD model. Default is 'legacy'.baseAssetPathspecifies the base URL/path for VAD model files (.onnx). Defaults to CDN (https://cdn.jsdelivr.net/npm/@keyurmaru/vad@0.0.1/) but can be overridden for custom hosting.onnxWASMBasePathspecifies the base URL/path for onnxruntime WASM files. Defaults to CDN (https://cdn.jsdelivr.net/npm/onnxruntime-web@1.22.0/dist/) but can be overridden for custom hosting.recordConfigallows you to provide custom recording configuration for native platforms (iOS, Android, macOS, Windows, Linux). If not provided, default configuration with 16kHz sample rate, PCM16 encoding, echo cancellation, auto gain, and noise suppression will be used.

Future<void> startListening({

double positiveSpeechThreshold = 0.5,

double negativeSpeechThreshold = 0.35,

int preSpeechPadFrames = 1,

int redemptionFrames = 8,

int frameSamples = 1536,

int minSpeechFrames = 3,

bool submitUserSpeechOnPause = false,

String model = 'legacy',

String baseAssetPath = 'https://cdn.jsdelivr.net/npm/@keyurmaru/vad@0.0.1/',

String onnxWASMBasePath = 'https://cdn.jsdelivr.net/npm/onnxruntime-web@1.22.0/dist/',

RecordConfig? recordConfig,

Stream<Uint8List>? audioStream,

});

Custom Audio Stream:

audioStreamallows you to provide your own audio stream instead of using the built-in recorder. When provided, VadHandler will process audio from this stream instead of creating its own AudioRecorder. The stream should provide PCM16 audio data at 16kHz sample rate, mono channel. This is useful for:- Custom recording configurations not supported by the default recorder

- Processing audio from non-microphone sources (files, network streams, etc.)

- Integration with existing audio pipelines in your application

Example usage with custom audio stream:

import 'dart:typed_data';

import 'package:record/record.dart';

// Create your custom audio stream provider

class CustomAudioProvider {

AudioRecorder? _recorder;

Stream<Uint8List>? _stream;

Future<void> initialize() async {

_recorder = AudioRecorder();

const config = RecordConfig(

encoder: AudioEncoder.pcm16bits,

sampleRate: 16000,

numChannels: 1,

);

_stream = await _recorder!.startStream(config);

}

Stream<Uint8List>? get audioStream => _stream;

Future<void> dispose() async {

await _recorder?.dispose();

}

}

// Use it with VadHandler

final provider = CustomAudioProvider();

await provider.initialize();

await vadHandler.startListening(

audioStream: provider.audioStream, // Pass your custom stream

// ... other parameters

);

See the example app's CustomAudioStreamProvider for a complete implementation.

stopListening

Stops the VAD session. Returns a Future<void> that completes when the VAD session has stopped.

Future<void> stopListening();

pauseListening

Pauses VAD-based listening without fully stopping the audio stream. Returns a Future<void> that completes when the VAD session has been paused.

Note: If submitUserSpeechOnPause was enabled, any in-flight speech will immediately be submitted (forceEndSpeech()).

Future<void> pauseListening();

dispose

Disposes the VADHandler and closes all streams. Returns a Future<void> that completes when all resources have been disposed.

Future<void> dispose();

Events #

Available event streams to listen to various VAD events:

onSpeechEnd

Emitted when speech end is detected, providing audio samples.

onSpeechStart

Emitted when speech start is detected.

onRealSpeechStart

Emitted when actual speech is confirmed (exceeds minimum frames threshold).

onVADMisfire

Emitted when speech was initially detected but didn't meet the minimum speech frames threshold.

onFrameProcessed

Emitted after each audio frame is processed, providing speech probabilities and raw audio data.

onError

Emitted when an error occurs.

Permissions #

Proper handling of microphone permissions is crucial for the VAD Package to function correctly on all platforms.

iOS #

-

Configuration: Ensure that

NSMicrophoneUsageDescriptionis added to yourInfo.plistwith a descriptive message explaining why the app requires microphone access. -

Runtime Permission: Request microphone permission at runtime using the

permission_handlerpackage.

Android #

-

Configuration: Add the

RECORD_AUDIO,MODIFY_AUDIO_SETTINGS, andINTERNETpermissions to yourAndroidManifest.xml. -

Runtime Permission: Request microphone permission at runtime using the

permission_handlerpackage.

Web #

- Browser Permissions: Microphone access is managed by the browser. Users will be prompted to grant microphone access when the VAD starts listening.

Cleaning Up #

To prevent memory leaks and ensure that all resources are properly released, always call the dispose method on the VadHandler instance when it's no longer needed. Since dispose() is now async, use await when possible:

// When called from an async context

await vadHandler.dispose();

// In Widget.dispose() (synchronous context), call without await

vadHandler.dispose();

Troubleshooting #

iOS Issues #

TestFlight Build Error: "Failed to lookup symbol 'OrtGetApiBase'"

If you encounter this error when uploading to TestFlight:

flutter: VAD model initialization failed: Invalid argument(s): Failed to lookup symbol 'OrtGetApiBase': dlsym(RTLD_DEFAULT, OrtGetApiBase): symbol not found

Fix: Configure Xcode build settings to prevent symbol stripping:

- Open Xcode → Runner.xcodeproj

- Select "Targets-Runner" → Build Settings Tab

- Navigate to the Deployment category

- Set "Strip Linked Product" to "No"

- Set "Strip Style" to "Non-Global-Symbols"

Solution found at: https://github.com/gtbluesky/onnxruntime_flutter/issues/24#issuecomment-2419096341

Android Issues #

Echo Cancellation Not Working on Some Android Devices

Some Android devices, particularly Samsung devices (e.g., Samsung S20), may experience issues with echo cancellation not functioning properly, while the same code works fine on other devices (e.g., Lenovo Tab M8).

Fix: Configure Android-specific audio settings in the RecordConfig. The VAD package uses improved audio configuration by default, but you can also customize it:

await _vadHandler.startListening(

recordConfig: const RecordConfig(

encoder: AudioEncoder.pcm16bits,

sampleRate: 16000,

numChannels: 1,

echoCancel: true,

noiseSuppress: true,

autoGain: true,

androidConfig: AndroidRecordConfig(

audioSource: AndroidAudioSource.voiceCommunication,

audioManagerMode: AudioManagerMode.modeInCommunication,

speakerphone: true,

manageBluetooth: true,

),

),

);

This configuration leverages AudioManager.MODE_IN_COMMUNICATION and speakerphone mode along with the android.permission.MODIFY_AUDIO_SETTINGS permission to resolve echo cancellation issues. The VAD package uses these settings by default.

Tested Platforms #

The VAD Package has been tested on the following platforms:

- iOS: Tested on iPhone 15 Pro Max running iOS 18.1.

- Android: Tested on Lenovo Tab M8 running Android 10, Samsung S20 running Android 15.

- Web: Tested on Chrome Mac/Windows/Android/iOS, Safari Mac/iOS.

- macOS: Tested on macOS Sequoia 15.1.

- Windows: Tested on Windows 11.

- Linux: Tested on Ubuntu 24.04.

Contributing #

Contributions are welcome! Please feel free to submit a pull request or open an issue if you encounter any problems or have suggestions for improvements.

Acknowledgements #

Special thanks to Ricky0123 for creating the VAD JavaScript library, the Silero Team for the VAD model used in the library, and Microsoft for the ONNX Runtime that powers the model inference.

License #

This project is licensed under the MIT License. See the LICENSE file for details.

For any issues or contributions, please visit the GitHub repository.