google_mlkit_subject_segmentation 0.0.3

google_mlkit_subject_segmentation: ^0.0.3

A Flutter plugin to use Google's ML Kit Subject Segmentation API to easily separate subjects from the background within a scene and focus on what matters.

Google's ML Kit Subject Segmentation for Flutter

NOTE: This feature is still in Beta and is only available for Android. Stay tuned for updates on Google's website, and request the feature here.

A Flutter plugin to use Google's ML Kit Subject Segmentation to easily separate multiple subjects from the background in a picture, enabling use cases such as sticker creation, background swap, or adding cool effects to subjects.

Subjects are defined as the most prominent people, pets, or objects in the foreground of the image. If two subjects are very close to or touching each other, they are considered a single subject.

Each pixel of the mask is assigned a floating-point value between 0.0 and 1.0. The closer the value is to 1.0, the higher the confidence that the pixel belongs to a subject; the closer it is to 0.0, the more likely the pixel is background.
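To make the confidence values concrete, here is a minimal pure-Dart sketch of two common ways to consume such a mask: turning it into a hard subject/background decision, and using it as a blend weight for a background swap. It assumes the mask is available as a flat, row-major List<double> with one value per pixel; the helper names thresholdMask and blendChannel and the 0.5 default threshold are hypothetical choices for illustration, not part of this plugin's API.

```dart
/// Hypothetical helper (not part of this plugin): marks a pixel as subject
/// when its confidence is at least [threshold]. The mask is assumed to be a
/// flat, row-major list with one value in 0.0..1.0 per pixel.
List<bool> thresholdMask(List<double> mask, {double threshold = 0.5}) {
  return [for (final confidence in mask) confidence >= threshold];
}

/// Hypothetical helper (not part of this plugin): blends one 8-bit color
/// channel of the original (subject) pixel with the same channel of a
/// replacement background, using the pixel's confidence as the subject's
/// weight.
int blendChannel(int subject, int background, double confidence) {
  // Clamp defensively in case a value falls slightly outside 0.0..1.0.
  final weight = confidence.clamp(0.0, 1.0);
  return (weight * subject + (1 - weight) * background).round();
}
```

For example, thresholdMask([0.1, 0.5, 0.9]) yields [false, true, true]. Blending with the raw confidence instead of a thresholded value keeps soft edges (hair, fur) from looking cut out, which is why a weighted blend is a common choice for background swaps.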

On average, the latency measured on a Pixel 7 Pro is around 200 ms. This API currently supports only static images.

Key capabilities

- Multi-subject segmentation: provides masks and bitmaps for each individual subject, rather than a single mask and bitmap for all subjects combined.

- Subject recognition: recognized subjects can be objects, pets, and humans.

- On-device processing: all processing is performed on the device, preserving user privacy and requiring no network connectivity.
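Because each subject gets its own mask, an app that wants a single combined mask has to merge them. The pure-Dart sketch below assumes, as in the native Android ML Kit API as we understand it, that each subject's confidence mask covers only that subject's bounding box (width * height values, row-major, starting at startX/startY in the full image); check how this plugin exposes those fields before relying on it. pasteSubjectMask and its parameter names are hypothetical.

```dart
/// Hypothetical helper (not part of this plugin): copies one subject's
/// cropped confidence mask into a full-image mask. Where subject masks
/// overlap, the higher confidence wins.
///
/// Assumes [subjectMask] holds width * height values in row-major order
/// and that the crop lies entirely inside the image.
void pasteSubjectMask({
  required List<double> fullMask,
  required int imageWidth,
  required List<double> subjectMask,
  required int startX,
  required int startY,
  required int width,
  required int height,
}) {
  for (var y = 0; y < height; y++) {
    for (var x = 0; x < width; x++) {
      // Index into the subject's own crop.
      final confidence = subjectMask[y * width + x];
      // Index of the same pixel in the full image.
      final index = (startY + y) * imageWidth + (startX + x);
      if (confidence > fullMask[index]) {
        fullMask[index] = confidence;
      }
    }
  }
}
```

Start from List<double>.filled(imageWidth * imageHeight, 0.0) and call pasteSubjectMask once per subject. Taking the per-pixel maximum rather than summing keeps every value within 0.0 to 1.0.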

PLEASE READ THIS before continuing or posting a new issue:

- Google's ML Kit was built only for mobile platforms: iOS and Android apps. Web and other platforms are not supported; you can request support for those platforms from Google in their repo.

- This plugin is not sponsored or maintained by Google. The authors are developers excited about machine learning who wanted to expose Google's native APIs to Flutter.

- Google's ML Kit APIs are only developed natively for iOS and Android. This plugin uses Flutter Platform Channels as explained here.

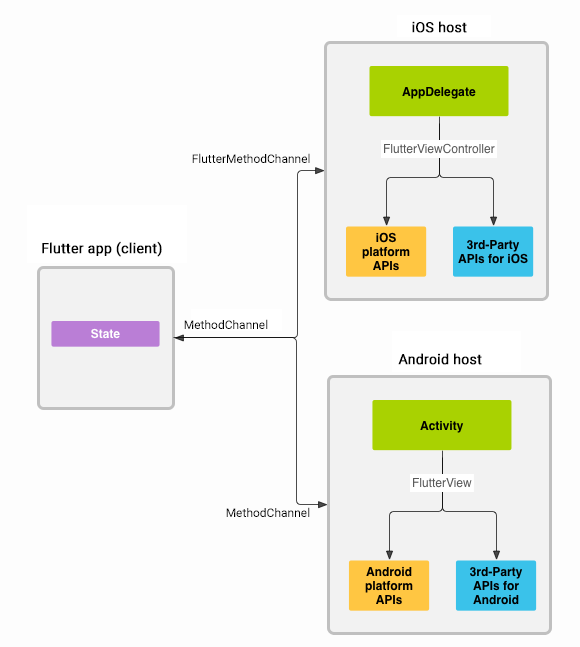

Messages are passed between the client (the app/plugin) and host (platform) using platform channels as illustrated in this diagram:

Messages and responses are passed asynchronously to ensure that the user interface remains responsive. To read more about platform channels, go here.

Because this plugin uses platform channels, no machine learning processing is done in Flutter/Dart. All calls are passed to the native platform using MethodChannel on Android and FlutterMethodChannel on iOS, and executed using Google's native APIs. Think of this plugin as a bridge between your app and Google's native ML Kit APIs: it only passes the call to the native API, and the processing is done by Google's API. It is important that you understand this concept when debugging errors in your ML model and/or app.

- Since the plugin uses platform channels, you may encounter issues with the native API. Before submitting a new issue, identify its source: run Google's native example apps for iOS and/or Android and check whether the issue reproduces there. If it does, report the issue to Google; the authors of this plugin do not have access to the source code of the native APIs. If Google's example apps work correctly and you still have an issue using this plugin, look through our closed and open issues. If nothing there helps, report the issue and provide enough details. Be patient; someone from the community will eventually help you.

Requirements

iOS

This feature is still in Beta and is only available for Android. Stay tuned for updates on Google's website, and request the feature here.

Android

- minSdkVersion: 24

- targetSdkVersion: 35

- compileSdkVersion: 35

You can configure your app to automatically download the model to the device after your app is installed from the Play Store. To do so, add the following declaration to your app's AndroidManifest.xml file:

<application ...>
  ...
  <meta-data
      android:name="com.google.mlkit.vision.DEPENDENCIES"
      android:value="subject_segment" />
  <!-- To use multiple models: android:value="subject_segment,model2,model3" -->
</application>

Usage

Subject Segmentation

Create an instance of InputImage

Create an instance of InputImage as explained here.

final InputImage inputImage;

Create an instance of SubjectSegmenter

final options = SubjectSegmenterOptions();

final segmenter = SubjectSegmenter(options: options);

Process image

final result = await segmenter.processImage(inputImage);

Release resources with close()

segmenter.close();

Example app

Find the example app here.

Contributing

Contributions are welcome. In case of any problems, look at existing issues; if you cannot find anything related to your problem, open an issue. Create an issue before opening a pull request for non-trivial fixes. For trivial fixes, open a pull request directly.