google_mlkit_object_detection 0.15.1

Google's ML Kit Object Detection and Tracking for Flutter #

A Flutter plugin to use Google's ML Kit Object Detection and Tracking to detect and track objects in an image or live camera feed.

PLEASE READ THIS before continuing or posting a new issue:

- Google's ML Kit was built only for mobile platforms: iOS and Android apps. Web and other platforms are not supported; you can request support for those platforms from Google in their repo.

- This plugin is not sponsored or maintained by Google. The authors are developers excited about machine learning who wanted to expose Google's native APIs to Flutter.

- Google's ML Kit APIs are only developed natively for iOS and Android. This plugin uses Flutter Platform Channels as explained here.

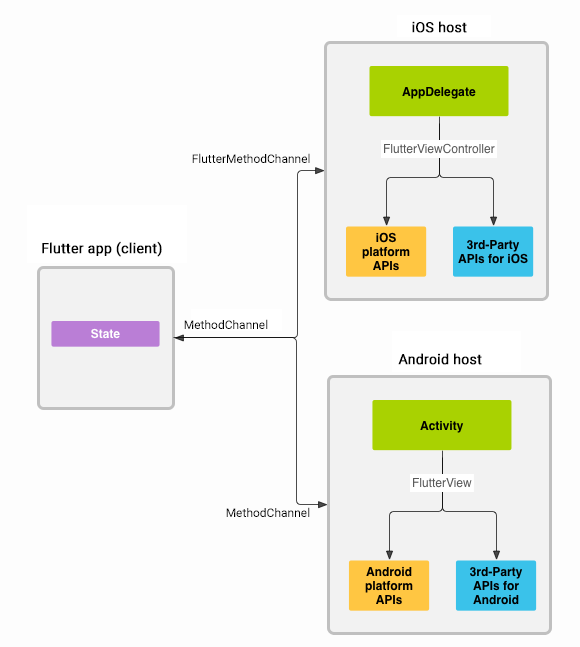

Messages are passed between the client (the app/plugin) and host (platform) using platform channels as illustrated in this diagram:

Messages and responses are passed asynchronously, to ensure the user interface remains responsive. To read more about platform channels go here.

Because this plugin uses platform channels, no machine learning processing is done in Flutter/Dart. All calls are passed to the native platform using MethodChannel on Android and FlutterMethodChannel on iOS, and executed using Google's native APIs. Think of this plugin as a bridge between your app and Google's native ML Kit APIs: it only passes the call to the native API, and the processing is done by Google's API. It is important that you understand this concept when debugging errors in your ML model and/or app.

- Since the plugin uses platform channels, you may encounter issues with the native API. Before submitting a new issue, identify the source of the issue. You can run Google's native example apps for iOS and/or Android and check whether the issue is reproducible there. If it is, report the issue to Google: the authors do not have access to the source code of the native APIs. If Google's example apps work and you still have an issue with this plugin, look at our closed and open issues. If you cannot find anything that helps, report the issue and provide enough details. Be patient, someone from the community will eventually help you.
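To make the bridge idea concrete, here is a toy, pure-Dart stand-in for a platform channel. It is not Flutter's MethodChannel and not this plugin's code: the class FakeNativeChannel, the method name 'detect', and the helper detectLabels are all hypothetical. It only sketches the shape of the exchange, where the Dart side sends a method name plus arguments, awaits the reply, and does no processing itself.

```dart
import 'dart:async';

/// Signature of a "native side" handler: receives arguments, returns a result.
typedef NativeHandler = FutureOr<Object?> Function(Map<String, Object?> args);

/// Hypothetical stand-in for a platform channel (NOT Flutter's MethodChannel).
class FakeNativeChannel {
  FakeNativeChannel(this._handlers);

  final Map<String, NativeHandler> _handlers;

  /// Forwards the call to the registered handler and completes asynchronously.
  Future<Object?> invokeMethod(String method, Map<String, Object?> args) async {
    final handler = _handlers[method];
    if (handler == null) {
      throw UnsupportedError('No native implementation for "$method"');
    }
    return await handler(args);
  }
}

/// Dart-side wrapper: it only packs the arguments and unpacks the reply.
Future<List<String>> detectLabels(
  FakeNativeChannel channel,
  String imagePath,
) async {
  final reply = await channel.invokeMethod('detect', {'path': imagePath});
  return (reply as List).cast<String>();
}
```

If a call like this fails, the useful question is which side failed: the packing and unpacking in Dart, or the handler on the native side.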

Requirements #

iOS #

- Minimum iOS Deployment Target: 15.5

- Xcode 15.3.0 or newer

- Swift 5

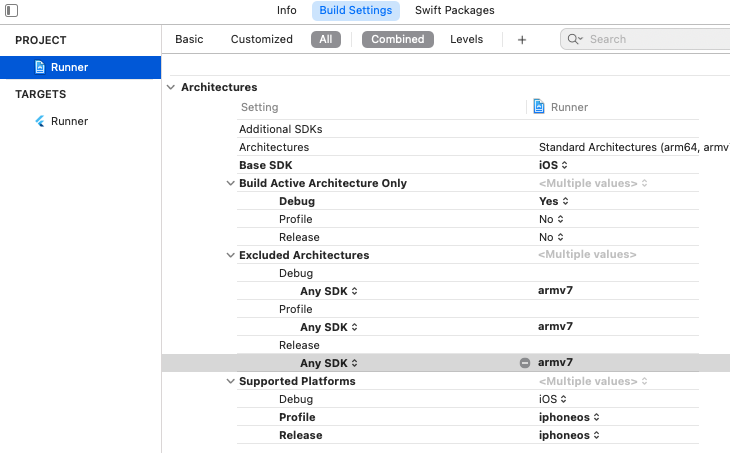

- ML Kit does not support 32-bit architectures (i386 and armv7). ML Kit does support 64-bit architectures (x86_64 and arm64). Check this list to see if your device has the required device capabilities. More info here.

Since ML Kit does not support 32-bit architectures (i386 and armv7), you need to exclude armv7 architectures in Xcode in order to run flutter build ios or flutter build ipa. More info here.

Go to Project > Runner > Build Settings > Excluded Architectures > Any SDK > armv7

Your Podfile should look like this:

    platform :ios, '15.5'  # or newer version

    ...

    # add this line:
    $iOSVersion = '15.5'  # or newer version

    post_install do |installer|
      # add these lines:
      installer.pods_project.build_configurations.each do |config|
        config.build_settings["EXCLUDED_ARCHS[sdk=*]"] = "armv7"
        config.build_settings['IPHONEOS_DEPLOYMENT_TARGET'] = $iOSVersion
      end

      installer.pods_project.targets.each do |target|
        flutter_additional_ios_build_settings(target)

        # add these lines:
        target.build_configurations.each do |config|
          if Gem::Version.new($iOSVersion) > Gem::Version.new(config.build_settings['IPHONEOS_DEPLOYMENT_TARGET'])
            config.build_settings['IPHONEOS_DEPLOYMENT_TARGET'] = $iOSVersion
          end
        end
      end
    end

Notice that the minimum IPHONEOS_DEPLOYMENT_TARGET is 15.5. You can set it to a newer version, but not to an older one.

Android #

- minSdkVersion: 21

- targetSdkVersion: 35

- compileSdkVersion: 35

Usage #

Create an instance of InputImage #

Create an instance of InputImage as explained here.

final InputImage inputImage;

Create an instance of ObjectDetector #

    // Use DetectionMode.stream when processing a camera feed.
    // Use DetectionMode.single when processing a single image.
    final mode = DetectionMode.stream; // or DetectionMode.single

    // Pick ONE of the following options objects.

    // Options to configure the detector when using the base model.
    final options = ObjectDetectorOptions(...);

    // Options to configure the detector when using a local custom model.
    // final options = LocalObjectDetectorOptions(...);

    // Options to configure the detector when using a Firebase model.
    // final options = FirebaseObjectDetectorOptions(...);

    final objectDetector = ObjectDetector(options: options);

Process image #

    final List<DetectedObject> objects = await objectDetector.processImage(inputImage);

    for (DetectedObject detectedObject in objects) {
      final rect = detectedObject.boundingBox;
      final trackingId = detectedObject.trackingId;

      for (Label label in detectedObject.labels) {
        print('${label.text} ${label.confidence}');
      }
    }
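The loop above prints every label regardless of how confident the model is. A small helper for keeping only confident results might look like the sketch below. It is not part of the plugin: filterByConfidence, its parameters, and the default threshold of 0.5 are hypothetical, and it is written generically so it does not depend on the plugin's types.

```dart
/// Returns the items whose confidence is at least [minConfidence],
/// sorted from most to least confident.
///
/// [confidenceOf] extracts the confidence value from an item.
List<T> filterByConfidence<T>(
  Iterable<T> items,
  double Function(T item) confidenceOf, {
  double minConfidence = 0.5,
}) {
  final kept =
      items.where((item) => confidenceOf(item) >= minConfidence).toList();
  kept.sort((a, b) => confidenceOf(b).compareTo(confidenceOf(a)));
  return kept;
}

// Possible use with the labels from the loop above (assumes label.confidence
// is a double, as the print statement suggests):
//
//   final confident = filterByConfidence(
//     detectedObject.labels,
//     (Label label) => label.confidence,
//     minConfidence: 0.7,
//   );
```

The right threshold depends on the model and the use case, so treat 0.5 and 0.7 as placeholders.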

Release resources with close() #

objectDetector.close();
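One way to make sure close() still runs when processing throws is a try/finally wrapper. The helper below is a generic sketch rather than plugin code: withResource and its parameter names are hypothetical, and the usage comment assumes that close() returns a Future.

```dart
/// Runs [use] with [resource] and always calls [release] afterwards,
/// whether [use] completes normally or throws.
Future<R> withResource<T, R>(
  T resource,
  Future<R> Function(T resource) use,
  Future<void> Function(T resource) release,
) async {
  try {
    return await use(resource);
  } finally {
    await release(resource);
  }
}

// Possible use for a one-off detection (DetectionMode.single):
//
//   final objects = await withResource(
//     ObjectDetector(options: options),
//     (detector) => detector.processImage(inputImage),
//     (detector) => detector.close(),
//   );
```

For a live camera feed you would normally keep one detector for the whole stream and close it when the stream ends, for example in a widget's dispose method, rather than wrapping every frame.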

Models #

Object Detection and Tracking can be used with either the Base Model or a Custom Model. The base model is the default model bundled in the SDK, and a custom model can either be bundled with the app as an asset or downloaded from Firebase.

Base model #

To use the base model:

    final options = ObjectDetectorOptions(
      mode: DetectionMode.stream,
      classifyObjects: classifyObjects,
      multipleObjects: multipleObjects,
    );

    final objectDetector = ObjectDetector(options: options);

Local custom model #

Before using a custom model, make sure you read and understand ML Kit's compatibility requirements for TensorFlow Lite models here. To learn how to create a custom model that is compatible with ML Kit go here.

To use a local custom model, add the folder containing the .tflite model to the assets in your pubspec.yaml:

    assets:
      - assets/ml/

Add this method:

    import 'dart:io';

    import 'package:flutter/services.dart';
    import 'package:path/path.dart';
    import 'package:path_provider/path_provider.dart';

    /// Copies the bundled asset into the application support directory
    /// (only if it is not there yet) and returns the resulting file path.
    Future<String> getModelPath(String asset) async {
      final path = '${(await getApplicationSupportDirectory()).path}/$asset';
      await Directory(dirname(path)).create(recursive: true);
      final file = File(path);
      if (!await file.exists()) {
        final byteData = await rootBundle.load(asset);
        await file.writeAsBytes(byteData.buffer
            .asUint8List(byteData.offsetInBytes, byteData.lengthInBytes));
      }
      return file.path;
    }

Create an instance of ObjectDetector:

    final modelPath = await getModelPath('assets/ml/object_labeler.tflite');

    final options = LocalObjectDetectorOptions(
      mode: DetectionMode.stream,
      modelPath: modelPath,
      classifyObjects: classifyObjects,
      multipleObjects: multipleObjects,
    );

    final objectDetector = ObjectDetector(options: options);

Android Additional Setup

Add the following to your app's build.gradle file to ensure Gradle doesn't compress the model file when building the app:

    android {
        // ...
        aaptOptions {
            noCompress "tflite"
            // or noCompress "lite"
        }
    }

Firebase model #

Google's standalone ML Kit library does NOT have any direct dependency on Firebase. As designed by Google, you do NOT need to include Firebase in your project in order to use ML Kit. However, to use a remote model hosted in Firebase, you must set up Firebase in your project following these steps:

iOS Additional Setup

Additionally, for iOS you have to update your app's Podfile.

First, include GoogleMLKit/LinkFirebase and Firebase in your Podfile:

    platform :ios, '15.5'

    ...

    # Enable firebase-hosted models #
    pod 'GoogleMLKit/LinkFirebase'
    pod 'Firebase'

Next, add the preprocessor flag to enable the firebase remote models at compile time. To do that, update your existing build_configurations loop in the post_install step with the following:

    post_install do |installer|
      installer.pods_project.targets.each do |target|
        ... # Here are some configurations automatically generated by flutter

        target.build_configurations.each do |config|
          # Enable firebase-hosted ML models
          config.build_settings['GCC_PREPROCESSOR_DEFINITIONS'] ||= [
            '$(inherited)',
            'MLKIT_FIREBASE_MODELS=1',
          ]
        end
      end
    end

Usage

To use a Firebase model:

    final options = FirebaseObjectDetectorOptions(
      mode: DetectionMode.stream,
      modelName: modelName,
      classifyObjects: classifyObjects,
      multipleObjects: multipleObjects,
    );

    final objectDetector = ObjectDetector(options: options);

Managing Firebase models

Create an instance of the model manager:

final modelManager = FirebaseObjectDetectorModelManager();

To check if a model is downloaded:

final bool response = await modelManager.isModelDownloaded(modelName);

To download a model:

final bool response = await modelManager.downloadModel(modelName);

To delete a model:

final bool response = await modelManager.deleteModel(modelName);
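The check and download calls can be combined so that a model is fetched only when it is missing. The sketch below is not part of the plugin: ensureModelAvailable and its callback parameters are hypothetical, and it takes the two operations as functions so the logic stays independent of the plugin's classes.

```dart
/// Returns true if the model is already available or was downloaded
/// successfully, and false if the download reported failure.
///
/// [download] is only called when [isDownloaded] returns false.
Future<bool> ensureModelAvailable(
  String modelName, {
  required Future<bool> Function(String modelName) isDownloaded,
  required Future<bool> Function(String modelName) download,
}) async {
  if (await isDownloaded(modelName)) {
    return true;
  }
  return download(modelName);
}

// Possible use with the model manager shown above:
//
//   final ready = await ensureModelAvailable(
//     modelName,
//     isDownloaded: (name) => modelManager.isModelDownloaded(name),
//     download: (name) => modelManager.downloadModel(name),
//   );
//   if (ready) {
//     // Safe to create the ObjectDetector with FirebaseObjectDetectorOptions.
//   }
```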

Example app #

Find the example app here.

Contributing #

Contributions are welcome. If you run into a problem, look at the existing issues first; if you cannot find anything related to your problem, open a new issue. For non-trivial fixes, create an issue before opening a pull request. For trivial fixes, open a pull request directly.